GSC Position: Don’t make this mistake

The “Position” metric in Google Search Console (GSC) is unique because it is calculated differently from the position you get in most other tools. As a result, many people work with this metric incorrectly.

Difference between field data and lab data

A brief explanation of the difference: other tools generally give you lab data. A crawler checks the search results at fixed intervals, for example once a day, and records the position it finds. The measurement is artificial, as in a lab.

GSC, by contrast, gives you field data. The position displayed is the average of all the positions at which real users actually saw your result. You could call it the “real” position, which makes it particularly valuable for SEO analyses.

So what is the mistake?

GSC reports the position at three levels: keyword, URL and domain. At the latter two levels, however, the position is averaged over many keywords, so it is too aggregated and often not meaningful.

So if you see the average position of a domain or an individual page getting worse (that is, the number going up), this does not necessarily mean anything negative. In fact, it can even be positive!

Example: Your domain ranks at position 1 for a keyword. If it now also ranks for a second keyword, but at position 10, your average position drops from 1 to 5.5 (assuming the same number of impressions for both keywords).

On paper, your position has dropped sharply, even though your visibility has increased significantly.
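The arithmetic behind this example can be sketched in a few lines of Python. This is a minimal illustration, assuming that the aggregated position is an impression-weighted average of the per-keyword positions; the function name `average_position` and the impression counts are made up for the example.

```python
def average_position(rows):
    """Impression-weighted average over (position, impressions) pairs."""
    total_impressions = sum(impressions for _, impressions in rows)
    if total_impressions == 0:
        raise ValueError("cannot average positions without impressions")
    weighted_sum = sum(position * impressions for position, impressions in rows)
    return weighted_sum / total_impressions


# Hypothetical numbers: one keyword at position 1, then a second one at position 10.
before = [(1.0, 1000)]
after = [(1.0, 1000), (10.0, 1000)]

print(average_position(before))  # 1.0
print(average_position(after))   # 5.5
```

The first keyword still ranks at position 1 in both cases. Only the mix of keywords behind the average has changed.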

How do you work correctly with the position metric?

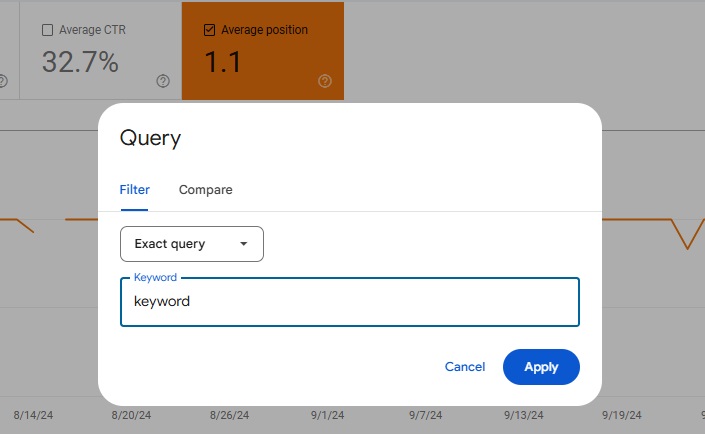

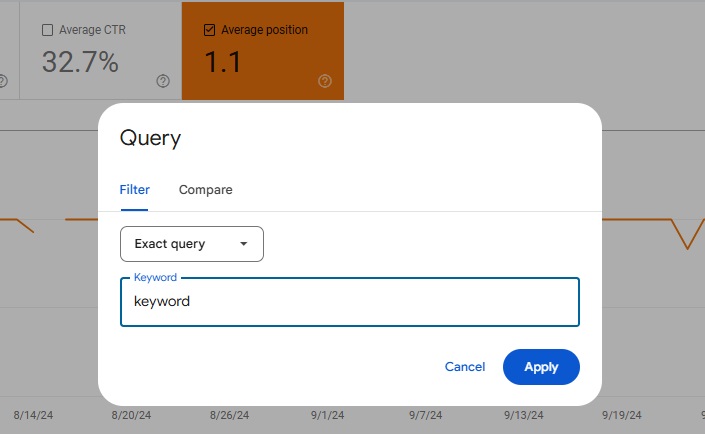

You can work with the Search Console position confidently as long as it refers to a single keyword. That is the case either when you are working in the “Keyword” tab (labelled “Queries” in the English interface) or when you have set a filter for one keyword (and are then working at page level, for example).

In this context, position is one of the best metrics available and should appear in reports.
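If you work with exported data rather than the interface, the same idea can be sketched as follows. The row layout (dictionaries with `query`, `impressions` and `position` keys) and the sample values are assumptions for illustration, not a fixed export format.

```python
from collections import defaultdict


def position_by_query(rows):
    """Return the impression-weighted position for each query separately."""
    weighted = defaultdict(float)
    impressions = defaultdict(int)
    for row in rows:
        weighted[row["query"]] += row["position"] * row["impressions"]
        impressions[row["query"]] += row["impressions"]
    # Queries without impressions have no position and are skipped.
    return {q: weighted[q] / impressions[q] for q in impressions if impressions[q] > 0}


# Hypothetical rows, e.g. one row per query and day.
rows = [
    {"query": "blue widgets", "impressions": 500, "position": 1.0},
    {"query": "blue widgets", "impressions": 500, "position": 1.0},
    {"query": "widget repair", "impressions": 1000, "position": 10.0},
]

per_query = position_by_query(rows)
print(per_query["blue widgets"])   # 1.0
print(per_query["widget repair"])  # 10.0
```

Each keyword keeps its own position here, so a new keyword at position 10 no longer hides the fact that the first one is still at position 1.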

Should you never use it otherwise?

At domain or page level, you should use this metric with caution. Treat it as a first indicator that something might be going wrong. If there are strong fluctuations, find out why and report the cause instead of the fluctuation itself.
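One possible way to look for the cause of a fluctuation is to compare the keywords behind two periods. The sketch below assumes you already have a mapping from keyword to `(position, impressions)` for each period; the function name `diagnose_shift` and the data are hypothetical.

```python
def diagnose_shift(previous, current):
    """Compare two periods, each a dict mapping query -> (position, impressions)."""
    shared = set(previous) & set(current)
    return {
        # Queries that only appear in the current period.
        "new": sorted(set(current) - set(previous)),
        # Queries that only appeared in the previous period.
        "lost": sorted(set(previous) - set(current)),
        # Position change per shared query (positive means a worse position).
        "moved": {
            q: current[q][0] - previous[q][0]
            for q in shared
            if current[q][0] != previous[q][0]
        },
    }


previous = {"blue widgets": (1.0, 1000)}
current = {"blue widgets": (1.0, 1000), "widget repair": (10.0, 1000)}

report = diagnose_shift(previous, current)
print(report)  # {'new': ['widget repair'], 'lost': [], 'moved': {}}
```

In this made-up case, no existing keyword has moved. The worse average comes entirely from a newly ranking keyword, which is the finding worth reporting.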

Julian has been working as a full-time SEO since 2016. As a consultant, he has supported over 100 clients and specialised primarily in data analysis. The Google Search Console was always at the centre of this.
